Overview

Beta

This Kubeflow component has beta status. See the Kubeflow versioning policies. The Kubeflow team is interested in your feedback about the usability of the feature.

What is Katib?

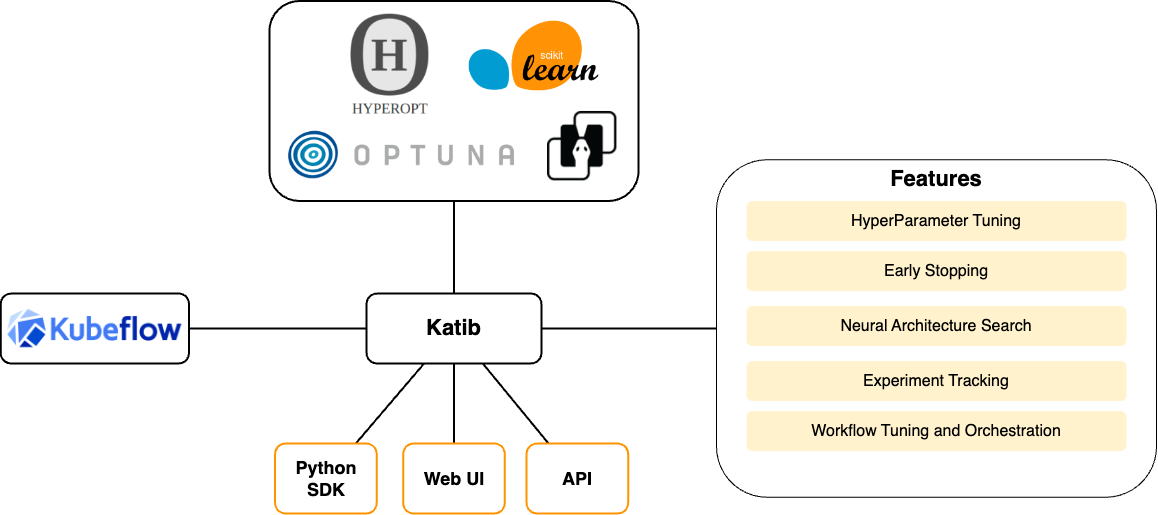

Katib is a Kubernetes-native project for automated machine learning (AutoML). Katib supports hyperparameter tuning, early stopping and neural architecture search (NAS). Learn more about AutoML at fast.ai, Google Cloud, Microsoft Azure or Amazon SageMaker.

Katib is agnostic to machine learning (ML) frameworks. It can tune hyperparameters of applications written in any language of the users’ choice, and it natively supports many ML frameworks, such as TensorFlow, MXNet, PyTorch, and XGBoost.

Katib supports many AutoML algorithms, including Bayesian optimization, Tree of Parzen Estimators, Random Search, Covariance Matrix Adaptation Evolution Strategy, Hyperband, Efficient Neural Architecture Search, and Differentiable Architecture Search. Additional algorithm support is coming soon.

The Katib project is open source. The developer guide is a good starting point for developers who want to contribute to the project.

Why Katib?

Katib addresses the AutoML step for hyperparameter optimization or neural architecture search in the AI/ML lifecycle, as shown in the diagram.

- Katib can orchestrate multi-node and multi-GPU distributed training workloads.

Katib is integrated with Kubeflow Training Operator jobs such as PyTorchJob, which allows you to optimize hyperparameters for models of any size, including large models.

In addition, Katib can orchestrate workflows such as Argo Workflows and Tekton Pipelines for more advanced optimization use cases.

- Katib is extensible and portable.

Katib runs Kubernetes containers to perform hyperparameter tuning jobs, which allows you to use Katib with any ML training framework.

Users can even use Katib to optimize non-ML tasks as long as optimization metrics can be collected.

- Katib has rich support for optimization algorithms.

Katib is integrated with many optimization frameworks, such as Hyperopt and Optuna, which implement most of the state-of-the-art optimization algorithms.

Users can leverage the Katib control plane to implement and benchmark their own optimization algorithms.
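The idea that any task can be tuned as long as it reports a metric can be illustrated with a minimal, self-contained sketch of random search, one of the algorithms listed above. This is plain Python and does not use the Katib API or reproduce Katib's implementation. The `objective` function, the `search_space` layout, and the `random_search` helper are hypothetical names chosen for illustration only.

```python
import random


def objective(params):
    """Hypothetical non-ML task: a metric to minimize, lowest at x=3, y=-1."""
    return (params["x"] - 3.0) ** 2 + (params["y"] + 1.0) ** 2


def random_search(objective_fn, search_space, max_trials, seed=0):
    """Sample each parameter uniformly and keep the trial with the lowest metric."""
    rng = random.Random(seed)  # fixed seed so repeated runs are reproducible
    best_params, best_metric = None, float("inf")
    for _ in range(max_trials):
        # One "trial": draw a value for every parameter from its (low, high) range.
        params = {
            name: rng.uniform(low, high)
            for name, (low, high) in search_space.items()
        }
        metric = objective_fn(params)  # the only thing the optimizer observes
        if metric < best_metric:
            best_params, best_metric = params, metric
    return best_params, best_metric


# Assumed search space: parameter name -> (min, max).
search_space = {"x": (-5.0, 5.0), "y": (-5.0, 5.0)}
best_params, best_metric = random_search(objective, search_space, max_trials=200)
print(f"best metric={best_metric:.4f} params={best_params}")
```

The optimizer only sees the parameter values it proposed and the metric that came back, so the objective could just as well be a training job, a simulation, or a benchmark script. In Katib, each trial would run as a container on Kubernetes rather than as a local function call.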

Next steps

Follow the installation guide to deploy Katib.

Run the examples from the getting started guide.
